How do UX professionals conduct research?

Context

This generative research study was commissioned by Delta CX to inform a potential SaaS solution that tackles some of the inefficiencies in the research process. We focused on understanding what kinds of challenges UX professionals face, how existing market solutions currently address them, and what could be improved further. We started our investigation with participant recruitment because it is tedious and time-consuming.

The research and design tasks were carried out predominantly by apprentices. On the research side, each of us worked with our own data set and carried out every activity independently. This was deliberate: it let us showcase each apprentice's individual results and address each person's knowledge needs and gaps more effectively. We were all mentored by Debbie Levitt, a UX veteran with 20 years of professional experience, who also reviewed and gave feedback on our work.

Research goals

- Explore existing market solutions, their offerings, strengths and weaknesses;

- Understand how UX research is conducted;

- Analyse the different process steps, tasks and sub-tasks;

- Identify and document potential audiences, their pain points, needs and motivations;

- Learn what tools UX professionals use and in what context;

- Suggest approaches to address identified gaps and opportunities;

Team

Debbie Levitt – CX and UX strategist, researcher, architect, and trainer

3 Research apprentices (including myself)

2 Interaction design apprentices

1 UI/Visual apprentice

1 UX Writer apprentice

My contribution

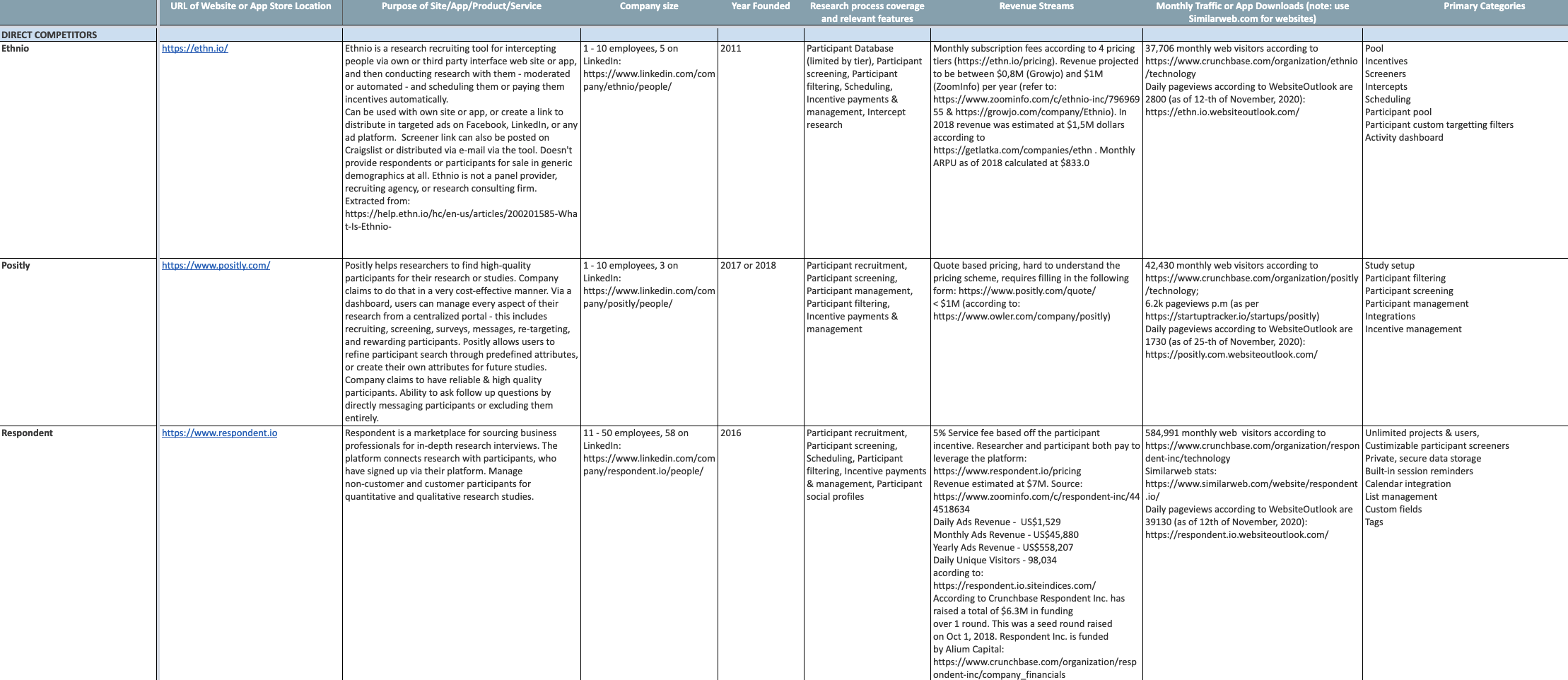

- Competitor analysis – fully responsible for a detailed competitor analysis of 10 research participant recruitment and management solutions;

- Discussion guide – proposed 20 questions (main and follow-up) for the interview sessions, some of which made it into the final version of the discussion guide;

- Recruitment – coordinated with the other 2 research apprentices to recruit from a shared list of pre-screened individuals, making sure each potential participant was contacted by only 1 apprentice. Each of us had to schedule and conduct sessions with at least 8 distinct participants;

- Semi-structured interviews – fully responsible for conducting 10 remote interview sessions with UX professionals;

- Notetaking and session transcription – transcribing the full interview sessions, adding comments and highlighting important sections;

- Task analysis – fully responsible for outlining the steps of the UX research process, broken down into 4 phases;

- Social media research – searched platforms like Twitter, Reddit and LinkedIn for terms identified during the analysis and gained additional perspectives from researchers around the world. Documented the findings in the affinity diagram and report;

- Personas – described the 3 types of professionals that I interviewed and captured their needs, pain points and motivations;

- Affinity diagram – fully responsible for grouping the research findings and interview insights into various themes and topics;

- Research report – fully responsible for consolidating all relevant research information and recommendations into a single document;

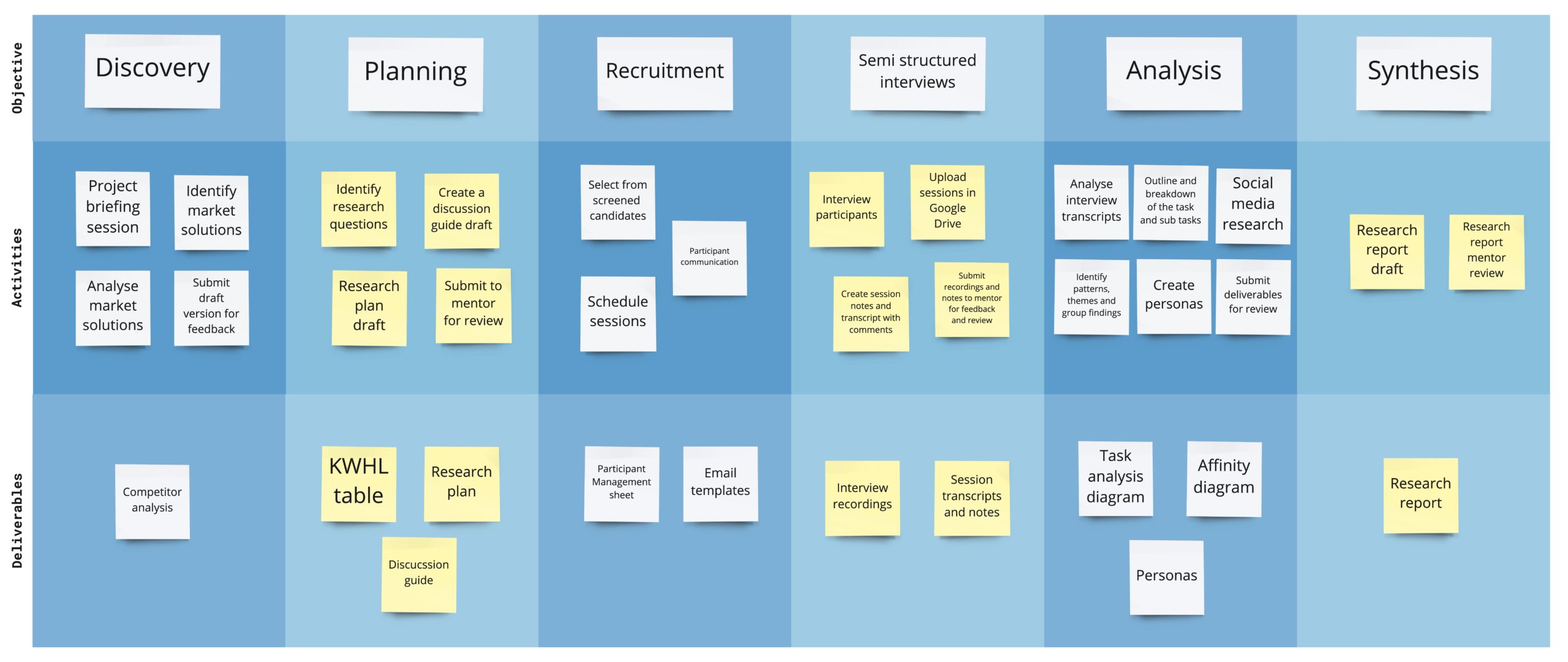

Process

This snapshot covers the research activities only, not the design process.

Deliverables

Competitor analysis

Our mentor shared the names of 4 solutions to explore as a starting point. While researching them, I learned about several other products with similar or relevant offerings. I decided to spend extra time including them in the evaluation to get a more holistic picture of the market landscape. This in turn helped me identify gaps in process coverage more effectively and, later, combined with the interview insights, form a realistic view of the best focus areas and opportunities for our solution.

The first version of my competitor analysis covered the 4 originally requested products; the second iteration built on it and added 6 more tools. I used a simplified version of Jaime Levy's competitor analysis template in Excel because of its granularity and comprehensive structure.

Task analysis

This process diagram is based on 11 interview sessions, 10 of which I conducted myself. The additional interview was carried out by Debbie Levitt and was shared with all apprentices for reference.

Each major phase is broken down into usual and optional activities: "usual" tasks were common to most participants, while "optional" ones were mentioned by only some of them. The process varies considerably between practitioners and won't match every case, but the diagram gives an accurate overview of the steps, sub-tasks and tools that characterize the research process.

The task analysis was created in Miro and took approximately 3 weeks to complete.

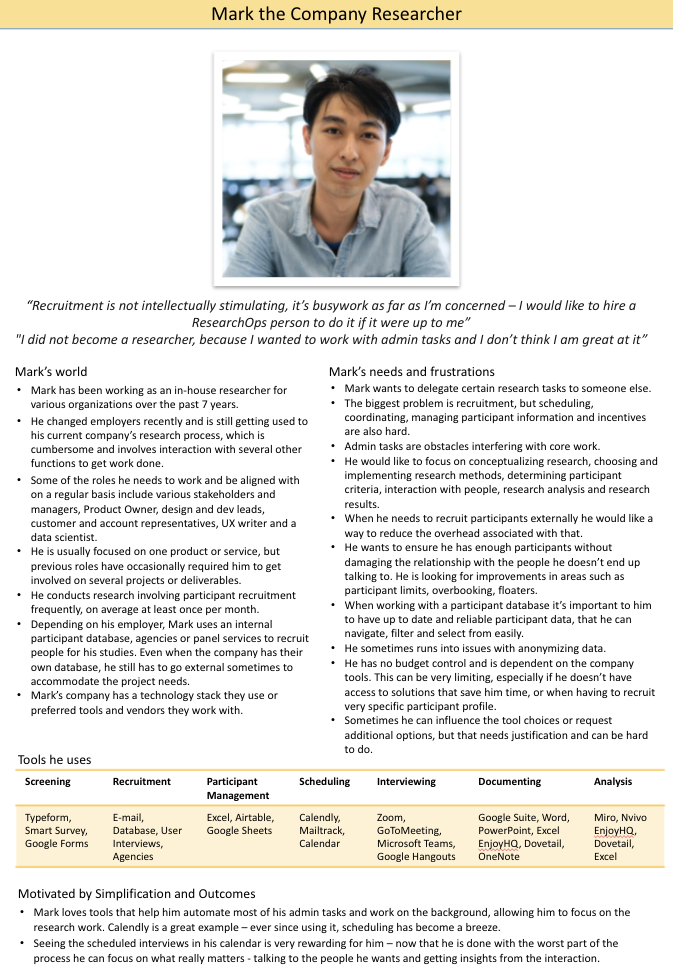

Personas

The interviews I personally conducted revealed 3 types of potential users: Research consultant, Company researcher, and Academic researcher. I also identified 2 other relevant profiles based on mentions from interviewees and discussions with fellow apprentices; however, summarizing their pain points, needs, and motivations accurately would have required additional research and sessions with those specific segments.

Each persona file covers in detail the circumstances, constraints, tools, needs, pain points, and motivations of the identified user archetype. I completed this deliverable over two weeks, after the data analysis.

One of the personas is shown below; the other 2 can be shared separately on request.

Company researcher

These are usually in-house experts employed by organizations to do research work. They either work full time on a single project, are embedded in a team or product, or alternate between different company initiatives.

Key pain points:

- Admin tasks – participant recruitment, scheduling, and managing participant information consume a lot of their time, which significantly limits their availability for research and analysis work.

- Lack of choice – they rarely have a say in the systems and tools their company chooses, regardless of whether those tools help or hinder their work.

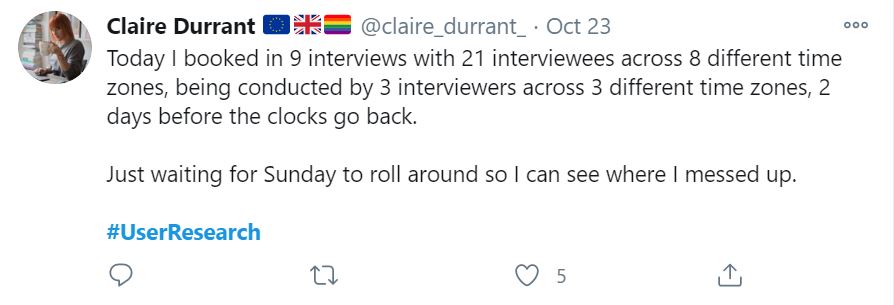

Social media research

I pursued this research direction on my own initiative, as I wanted to compare the impressions I got from the semi-structured interviews with what other practitioners were sharing on social media. I was curious whether other practitioners in the field would recognize the threads and themes identified during the analysis.

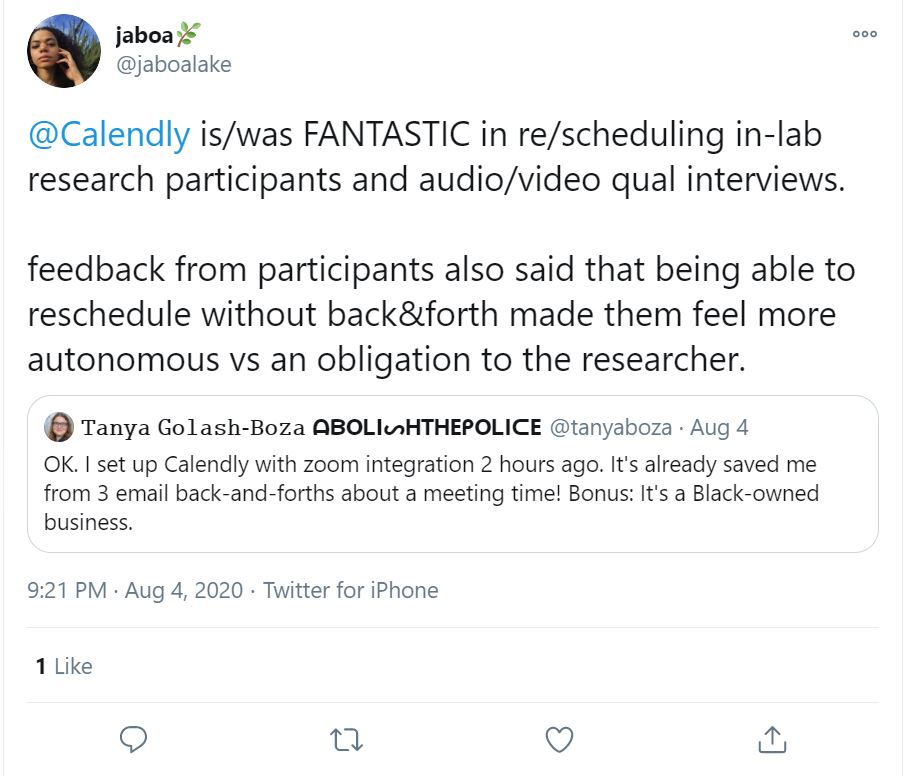

This proved relevant and surfaced additional quotes and statements supporting multiple findings. Along the way, I also learned about tools for collecting and processing data from social media and used a scraper called Reaper to run text searches across Twitter.

Challenges

Estimates

Problem:

This was my first time doing participant recruitment, personas, a task analysis, and an affinity diagram. As a result, my estimates of how long each deliverable would take were too optimistic.

Solution:

Track how long each task actually took, compare it to my estimates, and look for ways to optimize the process. For example, for some of the interviews I used the free version of Otter to get a partial (or, in some cases, full) transcript and save time.

Recruitment

Participants were recruited from the personal networks of Debbie Levitt and the apprentices. The goal was to talk to UX professionals who were willing to attend an hour-long session without monetary compensation and to help up-and-coming UX-ers gain interviewing experience. Across all research apprentices, we spoke with 26 people in total.

We worked from the screener results, with each of us contacting different individuals. Each research apprentice interviewed at least 8 practitioners. One participant's interview was shared with all research apprentices and is included in each of our data sets.

Problem:

Because this was an unpaid study, it was harder to find people willing to attend an hour-long interview session.

Solution:

With Debbie's agreement, I extended the recruitment timeline by a month and opened interview slots on weekends. I also reached out to my personal network for more participants, posted the screener on Reddit and in various UX Slack groups, sent reminders to some participants, and offered to shorten sessions to 30 minutes.

Problem:

Multiple outgoing invites and different participant time zones required robust calendar management.

Solution:

I used Meetingbird, a Calendly alternative (discontinued in December 2020), to manage my availability and make it easier for participants to book sessions. To work out time zone differences and open appropriate time slots, I used a website called Worldtimebuddy.

Key Findings

The 55-page report outlines the steps of the research process, the gaps, and conclusions on key topics such as planning, recruitment, scheduling, participant management, and tools. The findings below are shared with permission.

- Participant recruitment requires significant effort, regardless of the chosen method. Panels, in-house databases, and agencies create the illusion of simplifying the process. In reality, they mainly shorten the time between specifying a participant type and finding potential matches.

- Comprehensive scheduling tools significantly improve researchers' quality of life. Solutions like Calendly are gaining a lot of use, traction, and recognition because they remove the back-and-forth with participants, simplify calendar management, handle time zone issues, and automate meeting creation.

- The research process involves a significant number of manual activities, e.g. participant selection and management, discussion guide preparation and refinement, and incentive handling. Industry professionals would gladly delegate, outsource, or automate many of these so they can focus and free up more time for analysis and synthesis.

Project Outcomes

The insights from my competitor analysis helped shape the product's business model and identify ways it could stand out from other market players. My research and analysis work was used extensively in the design of several application modules and strongly influenced the approach to a number of product features, including participant recruitment, management, and scheduling.

This project helped several aspiring UX-ers like me gain practical experience in the field under a mentor's supervision. By interviewing senior researchers, I got a good grasp of the research workflow and tasks. I also learned how UX professionals currently handle each process step, what tools and workarounds they use day to day, and what their unmet needs are. This proved useful both from a product standpoint and for my personal growth as a researcher.

The project is currently pending additional financing, after which development work can continue.

Project Takeaways

1. Automate transcription whenever possible

Transcription is very time-consuming, and from a researcher's standpoint that time is better spent on other tasks. While transcribing by hand is great for getting familiar with the data, many existing AI solutions offer high accuracy at a fraction of the cost of a human transcriber. By using Otter, I reduced the time spent processing interview sessions by more than 50%.

2. Social media is a great way to get additional insights

Although social media insights are harder to find, navigate, and collect, they were instrumental in reinforcing some of the themes and conclusions from the interviews. Twitter was especially useful, offering both bite-sized, digestible quotes and in-depth threads.

3. Plan for more collaboration

The project was an excellent way to gain practical experience in the field but offered too few opportunities for collaboration. Because each of us worked with our own data set and spoke to different participant types, we couldn't combine our research without watching each other's interviews and reviewing each other's work. Timeline constraints, time zone differences, and individual availability made this infeasible, so after a few collaboration sessions we handed over our deliverables separately.